Augmented, Virtual and Mixed Reality in Dentistry: A Narrative Review on the Existing Platforms and Future Challenges

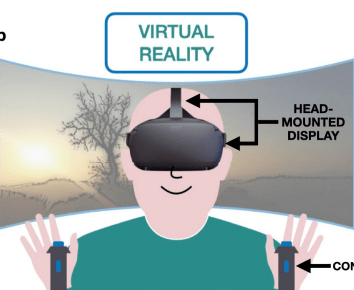

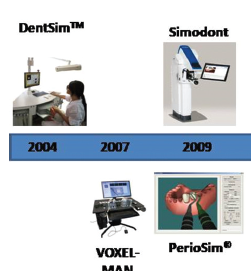

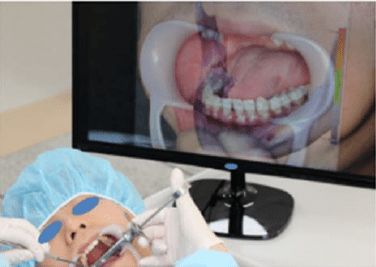

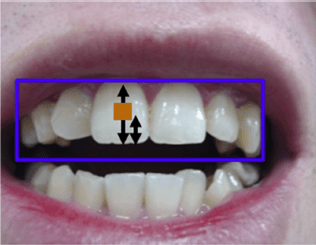

Abstract: The recent advancements in digital technologies have led to exponential progress in dentistry. This narrative review aims to […]